Talk:Closed Form Precision

For the most part the discussion of the Bessel, Gaussian and Rayleigh correction factors is off the deep end. All elementary textbooks would use (n-1) as the divisor when calculating the standard deviation, but few would name it. The Gaussian correction is small compared to the overall size of the confidence interval with small samples. For measures like group size it is also unnecessary. For small samples the distribution is significantly skewed, so you should use tables based on Monte Carlo results, which would have the correction factor "built in." By the time you get enough data for group size measurements to be really normally distributed, the Gaussian correction factor is totally negligible. Herb (talk) 16:36, 24 May 2015 (EDT)

- Perhaps the correction factors could be buried deeper or on another page. But we can't just wave them away and we shouldn't pull mysterious correction factors out of thin air. This is, after all, the closed form analysis. Yes, you can get the same results via Monte Carlo and use that approach instead, but for those who want to see the pure math these terms are part of it. David (talk) 17:56, 24 May 2015 (EDT)

The fact that most of the measures are really skewed distributions deserves more serious discussion. Herb (talk) 16:36, 24 May 2015 (EDT)

- Agreed. Higher moments are noted in a few of the spreadsheets, like Media:Sigma1RangeStatistics.xls, but a discourse would be a worthwhile addition. David (talk) 17:56, 24 May 2015 (EDT)

I must admit a bit of curiosity here. I don't know if the Student's T test has the Gaussian correction built into the tables or not. For small samples (2-5 degrees of freedom) the confidence interval is so large that it wouldn't make a lot of difference, and when doing various error combining to get an "effective" number for the degrees of freedom, having the factor built in might mess things up. I looked and can't find the answer readily available. I'll try to remember to ask the question on the math stats forum. Herb (talk) 16:36, 24 May 2015 (EDT)
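For a sense of scale on the point above, here is a minimal sketch of how large the bias of the sample standard deviation is at various sample sizes. It assumes the "Gaussian correction" under discussion is the reciprocal of the standard \(c_4(n)\) constant for normal samples; the function name `c4` is just for illustration and is not taken from the article.

```python
import math

def c4(n: int) -> float:
    """E[s] / sigma for n normal observations, where s uses the (n-1) divisor."""
    if n < 2:
        raise ValueError("need at least two observations")
    # lgamma keeps the gamma-function ratio stable for large n
    log_ratio = math.lgamma(n / 2) - math.lgamma((n - 1) / 2)
    return math.sqrt(2.0 / (n - 1)) * math.exp(log_ratio)

for n in (2, 3, 5, 10, 30, 100):
    # 1 - c4(n) is the fraction by which s underestimates sigma on average
    print(f"n={n:3d}  c4={c4(n):.4f}  average shortfall of s: {100 * (1 - c4(n)):.1f}%")
```

The shortfall is large for the smallest samples and shrinks quickly as n grows, which is the trade-off both sides of this thread are describing.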

σ

The paragraph under heading "Estimating σ" misses the salient points entirely. First, there are so many different uses of \(\sigma\) that it is confusing when hopping around on the wiki. \(\sigma_{RSD}\) should be used consistently for the radial standard deviation of the population of the Rayleigh distribution. Second, \(s_{RSD}\) can't be calculated directly from the experimental data the way s can for the normal distribution. You have to calculate \(s_r\) from the data, then use a theoretical proportionality constant to get \(s_{RSD}\). Herb (talk) 16:36, 24 May 2015 (EDT)

- As noted at the top of the page this use of σ is not confusing because it turns out to be the same σ used in the parameterization of the normal distribution used as the model, which is the same as the standard deviation. David (talk) 17:56, 24 May 2015 (EDT)

The discussion about the unbiased Rayleigh estimator is just wrong. I'll admit another limit on my knowledge here. You could calculate \(s_{RSD}\) from either the experimental \(\bar{r}\) or \(s_r\). It seems some combination of the two would give the "best" result. I don't have a clue how to do that error combination. I do know that in general the measurement of \(\bar{r}\) would have a greater relative precision (as % error) than \(s_r\). In fact the ratio of the two estimates could be used as a test for outliers. The standard deviation is more sensitive to an outlier than the mean.

- I don't understand what's wrong: the math is right there in Closed Form Precision#Variance_Estimates and Closed Form Precision#Rayleigh_Estimates. Expand the equations and they are identical. David (talk) 17:56, 24 May 2015 (EDT)

- The point that I am trying to make here, and above in my criticism of the paragraph under heading "Estimating σ", is that there are two different uses of \(\sigma\) connected to the Rayleigh distribution, and the two are not equal.

\(\sigma_r\) is the standard deviation of the mean radius measurement

\(\sigma_{RSD}\) is the shape factor used in the PDF

Also the Wikipedia section http://en.wikipedia.org/wiki/Rayleigh_distribution#Parameter_estimation is a bit confusing, but it essentially refers to N dimensions, not N data points in the sample. In our case N=2.

Herb (talk) 22:08, 24 May 2015 (EDT)
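To make the distinction in this comment concrete, here is a rough simulation sketch showing that the mean of the radii, the standard deviation of the radii, and the Rayleigh parameter σ are three different numbers related by fixed constants. It assumes radii are measured from the true center of a circular bivariate normal; the variable names are illustrative only.

```python
import math
import random

sigma = 1.0                       # Rayleigh parameter (also the per-axis normal sigma)
rng = random.Random(1)            # seeded so the run is repeatable
n = 100_000

# Radii of shots drawn from a circular bivariate normal centered on the origin
r = [math.hypot(rng.gauss(0, sigma), rng.gauss(0, sigma)) for _ in range(n)]

mean_r = math.fsum(r) / n
sd_r = math.sqrt(math.fsum((x - mean_r) ** 2 for x in r) / (n - 1))

# Theoretical constants for the Rayleigh distribution
MEAN_FACTOR = math.sqrt(math.pi / 2)        # E[r]  = sigma * 1.2533...
SD_FACTOR = math.sqrt((4 - math.pi) / 2)    # SD[r] = sigma * 0.6551...

# Either statistic can be scaled back to an estimate of sigma
sigma_from_mean = mean_r / MEAN_FACTOR
sigma_from_sd = sd_r / SD_FACTOR

print(f"mean radius        {mean_r:.3f}  (theory {sigma * MEAN_FACTOR:.3f})")
print(f"std. dev. of radii {sd_r:.3f}  (theory {sigma * SD_FACTOR:.3f})")
print(f"sigma from mean    {sigma_from_mean:.3f}")
print(f"sigma from s.d.    {sigma_from_sd:.3f}")
```

Both routes recover σ with a sample this large; which one is more precise for small n is the open question raised earlier in this section.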

- read this: http://ballistipedia.com/index.php?title=User_talk:Herb#Radial_Standard_Deviation_of_the_Rayleigh_Distribution

Herb (talk) 12:39, 25 May 2015 (EDT)

- In order to avoid this confusion I make a point here of suggesting we avoid talking about "radial standard deviation" (i.e., the standard deviation of radii). If we do that then the only σ we're talking about is the one used in the Rayleigh parameterization which (via transformation to polar coordinates) is the same as the σ parameter of the normal distribution associated with this model. If you see any other σ with another meaning please point it out so we can either remove it or clarify if it can't be removed. David (talk) 12:31, 25 May 2015 (EDT)

- Making the simplification doesn't avoid the confusion; it creates it. Wikipedia doesn't create unique σ's for every function and use by labeling them with subscripts, since there are thousands of cases. We should, since the σ in the Rayleigh distribution has other relationships to the distribution that the σ in the normal distribution doesn't have.

- As I noted above read this: http://ballistipedia.com/index.php?title=User_talk:Herb#Radial_Standard_Deviation_of_the_Rayleigh_Distribution

Herb (talk) 13:06, 25 May 2015 (EDT)

- "Radial Standard Deviation" is the problem. If by RSD you mean "the standard deviation of radii" then it is not the Rayleigh scale parameter. But if you introduce the term then people will confuse it with the Rayleigh parameter ... when they're not confusing it with earlier definitions like \(\sqrt{\sigma_h^2 + \sigma_v^2}\). I haven't found a reason to even refer to the standard deviation of radii: it is not used in any calculation or estimation, and it is not a helpful measure given the others in use.

- As for the unlabelled σ: When used as the Rayleigh parameter it is done in the context of the symmetric bivariate normal distribution, in which case every σ is identical. Elsewhere when we talk about non-symmetric distributions, or specific axes, we give it a subscript. Where else should it be labelled? David (talk) 14:03, 25 May 2015 (EDT)

*********************** ***** Eating Crow ***** ***********************

Your calculation for σ in the Rayleigh equation is correct. When you peel back the math, it is simply the pooled estimate of the two axis variances, \(\sqrt{(s_h^2 + s_v^2)/2}\). That is no doubt the best way to calculate the parameter.

Herb (talk) 20:52, 25 May 2015 (EDT)
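The identity that settled this thread can be checked numerically. The sketch below assumes the Rayleigh variance estimate takes the form \(\sum r_i^2 / (2n - 2)\), with radii measured from the sample center and before any correction for taking the square root; under that assumption it equals the average of the two per-axis sample variances.

```python
import random
import statistics

rng = random.Random(7)
n = 5
h = [rng.gauss(0, 1) for _ in range(n)]   # horizontal coordinates of a sample group
v = [rng.gauss(0, 1) for _ in range(n)]   # vertical coordinates

h_bar, v_bar = statistics.fmean(h), statistics.fmean(v)

# Squared radii measured from the sample center
sum_r2 = sum((hi - h_bar) ** 2 + (vi - v_bar) ** 2 for hi, vi in zip(h, v))

var_from_radii = sum_r2 / (2 * (n - 1))                             # Rayleigh-style estimate
var_pooled = (statistics.variance(h) + statistics.variance(v)) / 2  # pooled axis variances

print(var_from_radii, var_pooled)
```

The two values agree to rounding error because \(\sum r_i^2\) is just the sum of the horizontal and vertical sums of squares. Note that it is the variances that pool, not the standard deviations.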

Mean Diameter

In the section on Mean Radius the use of the phrase Mean Diameter seems out of whack. Using a "diameter" as a radius is just confusing for no good reason. Seems like you should just use \(2\bar{r}\). Herb (talk) 16:36, 24 May 2015 (EDT)

- Mean Diameter has come up as an attractive standard, since it corresponds to the 96% CEP which is closer to the measures people are used to than 50% CEP or the mean radius, and rolls off the tongue more easily than "two mean radii" or any other variation I've heard of. David (talk) 17:56, 24 May 2015 (EDT)
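The 96% figure can be checked directly from the Rayleigh CDF. This is a small sketch assuming the Rayleigh model with \(MR = \sigma\sqrt{\pi/2}\) as stated on the article page; the helper name `rayleigh_cdf` is illustrative.

```python
import math

def rayleigh_cdf(r: float, sigma: float = 1.0) -> float:
    """Probability that a shot lands within radius r of the center."""
    return 1.0 - math.exp(-(r * r) / (2.0 * sigma * sigma))

sigma = 1.0
mean_radius = sigma * math.sqrt(math.pi / 2)
mean_diameter = 2 * mean_radius

# Algebraically this is 1 - exp(-pi), independent of sigma
coverage = rayleigh_cdf(mean_diameter, sigma)
print(f"fraction of shots within one Mean Diameter of center: {coverage:.4f}")
```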

Sighter Shots

A number of things are stuffed on this wiki page because they fit some erroneous notion of "Closed form". For example, the discussion of sighter shots. That should be a separate wiki page. The whole thing about the errors for sighter shots also depends on the assumptions for the dispersion, for which there are 4 general cases. Herb (talk) 16:36, 24 May 2015 (EDT)

Student's T & Gaussian Correction factor

I asked a question on Stack Exchange about whether the Student's T table has the correction included. I'll update this if the question is answered.

- All in all I think mentioning the correction factors is overkill. So I'd recommend just using (n-1) when calculating variance and stopping at that. I've read dozens of statistics books where the Student's T test was described. I never saw anything about needing to correct the bias of the sample standard deviation due to sample size. The gist is that if I want to use the extreme spread measure for a 3-shot group to characterize my rifle, then the statistics are abysmally bad. Making the "Gaussian correction" won't magically fix that problem. Shooters typically use small sample statistics without realizing how badly that limits analysis. In other words... Bubba says "but I measured the 3-shot group to a thousandth of an inch with a $200 pair of vernier calipers. It is 1.756 inches every time I measure it." LOL

Herb (talk) 13:11, 26 May 2015 (EDT)

Mean Radius (MR) Section

Mean Radius \(MR = \sigma \sqrt{\frac{\pi}{2}} \ \approx 1.25 \ \sigma\).

**** that is correct. ****

The expected sample MR of a group of size n is

- \(MR_n = \sigma \sqrt{\frac{\pi}{2 c_{B}(n)}}\ = \sigma \sqrt{\frac{\pi (n - 1)}{2 n}}\)

**** This is wrong. This is where "correcting" a sample standard deviation gets you into trouble. The mean radius doesn't depend on sample size. The precision with which the mean radius is measured does depend on sample size. It would be far better to discuss the dependency of \(\sigma_{MR}\) as a function of sample size. ****

- This is a key point that perhaps bears elaboration, and which is why the correction factors get so much attention here: The expected value of a sample does require a correction for the sample size. If you Monte Carlo this (see, e.g., File:SymmetricBivariateSigma1.xls) you will see that right away, and if we don't include the correction factors then the numbers produced by the formulas are just not right – especially for the relatively small n values shooters typically use. Add the correction factors and they match for all n. I think the reason is easy to follow in the case of sample shot groups: Nobody gets to measure their groups from the "true" center. The center itself is estimated from the sample, and it is always closer to the sample shots than the true center.

- So yes, the true Mean Radius does not depend on sampling. But, given a Mean Radius, the expected value of a sample does depend on the number of shots sampled. David (talk)

- No, no, no, no.... This is why I think labeling the various \(\sigma\)'s is important. First, the \(\sigma\) that you're using is presumably from the Rayleigh parameter fitting. For a sample size of N shots, there are (2N-2) degrees of freedom.

- You're correct on the Monte Carlo point. You can easily simulate millions of shots, which is really impossible at a range. So discuss and make such corrections only in that context. In "everyday" use the corrections just aren't large enough to be significant.

- In the everyday use of the mean radius measurements you'd actually calculate all the \(r_i\) values and average them. The correct way to discuss the variability of that measurement then would be to use \(\sigma_{\bar{MR}}\), the standard deviation of the mean which does depend on sample size. But the value of the mean won't change, just the precision with which it is measured. In other words the "corrected" value for the mean would be well within any "reasonable" confidence interval for the mean.

- The Gaussian correction isn't magic that makes small sample statistics work. Think of it as lipstick on a bulldog. Doesn't make it pretty.

Herb (talk) 15:47, 26 May 2015 (EDT)

- Maybe it's not significant for a lot of real-world situations. Although I think it's important that people know that, for example, on average their 3-shot groups will show a Mean Radius that's only 80% of their gun's true MR. We can tuck these details out of the way where they're distracting, but anyone who wants to check the math has to see them. David (talk) 17:39, 26 May 2015 (EDT)

- You have to realize that a particular mean radius for a 3-shot sample may be low or high. Ideally you'd like the measurement to be normally distributed so that there is a 50% chance of high and a 50% chance of low, on average. But if the ratio gets skewed a bit so that 40% are high and 60% are low, then the skewness is virtually undetectable in real world experiments. There are some subtleties here that perhaps you're missing.

- (1) Did you notice that we may be using two different degrees of freedom here? If a sample is used to calculate the \(\sigma\) of the Rayleigh distribution, that would be one use. If we later try to predict the mean radius for a different 3-shot group sample, then that would be a second sample. We have to consider degrees of freedom from both.

- (2) The mean radius of a 3-shot group isn't normally distributed. It has a Chi-Squared distribution which is skewed. (Chi-Squared uses variance, not standard deviation). You'd need about 10 shots in a group to get a decently normally distributed mean radius. So at about 10 shots per group there would be a "smooth" transition going from the Chi-Squared distribution to the normal distribution approximation. At that point the correction is exceedingly small, and hence rather pointless.

Herb (talk) 19:26, 26 May 2015 (EDT)

- There are a lot of correct statements in here. The complexities of statistics should be spread out for those who want to be as precise and pedantic as possible. And for those who just want a simple tool they can use, or a straightforward answer to a straightforward question, we should endeavor to encapsulate as many of the details as possible.

- With regard to the second statement at the top of this thread: This is the simplest answer to one of the first questions I had when I began looking at this problem, which was, "If I somehow know the precision of my gun then what sorts of groups should I expect to see?" The answer is important for small n because we don't always have the luxury of shooting groups with large n. One can certainly delve deeper, but that is the formula for the first sample moment. If someone wants to work out the formulas for higher sample moments as a function of n that would be great.

- Looking at it now I am thinking that perhaps it would avoid confusion if I prefaced it by saying, "Given σ, the expected sample MR of a group of size n is..."?

- In any case, everything that I've written is in response to a question that I had or was asked. If something is incorrect then we should correct it. If it's not clear what question it answers or what purpose it serves then whoever wrote it should be ready to illuminate that. Hopefully I have at least confirmed that the original statement is in fact correct, and also provided reasonable justification for why it is worthy of note? David (talk) 21:12, 26 May 2015 (EDT)
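The two numerical claims in this thread (the expected sample MR as a function of n, and the skewness of the sample MR at small n) can both be probed with a short Monte Carlo. This is only a sketch: it assumes a circular bivariate normal with σ = 1 and radii measured from each group's own sample center, and the function names are made up for illustration rather than taken from the spreadsheets.

```python
import math
import random

def sample_mean_radius(n, sigma, rng):
    """Mean radius of one n-shot group, measured from the group's own center."""
    h = [rng.gauss(0, sigma) for _ in range(n)]
    v = [rng.gauss(0, sigma) for _ in range(n)]
    hc, vc = sum(h) / n, sum(v) / n
    return sum(math.hypot(a - hc, b - vc) for a, b in zip(h, v)) / n

def expected_sample_mr(n, sigma=1.0):
    """Closed-form expectation quoted at the top of this thread."""
    return sigma * math.sqrt(math.pi * (n - 1) / (2 * n))

def simulate_sample_mr(n, groups=40_000, sigma=1.0, seed=0):
    """Return (mean, skewness) of the sample MR over many simulated groups."""
    rng = random.Random(seed)
    mrs = [sample_mean_radius(n, sigma, rng) for _ in range(groups)]
    mean = sum(mrs) / groups
    m2 = sum((x - mean) ** 2 for x in mrs) / groups
    m3 = sum((x - mean) ** 3 for x in mrs) / groups
    return mean, m3 / m2 ** 1.5

true_mr = math.sqrt(math.pi / 2)  # population MR for sigma = 1
results = {n: simulate_sample_mr(n) for n in (3, 10)}
for n, (mean, skew) in results.items():
    print(f"n={n:2d}  simulated E[MR_n]={mean:.3f}  formula={expected_sample_mr(n):.3f}  "
          f"ratio to true MR={mean / true_mr:.3f}  skewness={skew:.2f}")
```

The simulated mean can be compared against the formula for each n, and the skewness column gives a rough measure of how far the sample MR is from symmetric at each group size.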

1 Symmetric Bivariate Normal = Rayleigh Distribution

... being more repetitive: "Symmetric Bivariate Normal = Rayleigh Distribution" This title is misleading, as the (univariate) Rayleigh distribution is not the same as a circular bivariate normal distribution. If the shots (in the sense of (h,v) coordinates) follow a circular bivariate normal distribution, then their distances to the true COI follow a Rayleigh distribution.
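The relationship described here can be illustrated with a quick simulation: draw (h,v) pairs from a circular bivariate normal, take each shot's distance to the true center, and compare the empirical distribution of those distances with the Rayleigh CDF. The value of σ and the helper names are arbitrary choices for this sketch.

```python
import math
import random

def rayleigh_cdf(r, sigma):
    """Rayleigh CDF with scale parameter sigma."""
    return 1.0 - math.exp(-(r * r) / (2.0 * sigma * sigma))

def empirical_cdf(sample, x):
    """Fraction of the sample at or below x."""
    return sum(1 for s in sample if s <= x) / len(sample)

sigma = 1.5
rng = random.Random(42)

# The shots themselves are two-dimensional (bivariate normal) ...
shots = [(rng.gauss(0, sigma), rng.gauss(0, sigma)) for _ in range(100_000)]
# ... while the distances to the true center are one-dimensional (Rayleigh)
radii = [math.hypot(h, v) for h, v in shots]

checkpoints = [q * sigma for q in (0.5, 1.0, 2.0, 3.0)]
max_gap = 0.0
for x in checkpoints:
    emp, theo = empirical_cdf(radii, x), rayleigh_cdf(x, sigma)
    max_gap = max(max_gap, abs(emp - theo))
    print(f"r={x:.2f}  empirical={emp:.4f}  Rayleigh={theo:.4f}")
```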

"In this case the dispersion of shots is modeled by a symmetric bivariate normal, which is equivalent[1] to the Rayleigh distribution" - see above.

How large a sample do we need?

This section could probably use improvement. Here's a rewrite from scratch that attempts to explain it more clearly:

One of the big questions people have is, “How many shots do I have to take to know how accurate something is?”

First, we need to realize, “You can never know for sure.” All you can do is increase your confidence that what you have observed (your sample) reflects what you would continue to observe if you kept shooting (drawing more samples). In order to do this with parametric statistics:

- We establish a model (in this case the symmetric bivariate normal, which is equivalent to the Rayleigh model)

- We figure out how to estimate the model parameter based on a sample that (we assume) is drawn from the model distribution

- We figure out how “confident” we are in a given estimate. (Confidence has a formal definition: Pick an interval about your estimate. Now, if we say that we are x% confident in that interval, then we expect that if we repeat the experiment of shooting the same number of shots and estimating the model parameter, then x% of those experiments will result in a parameter estimate within the interval.)

So we’ve gone through this tedious exercise, and we can correctly calculate all these things, but people still want to know essentially, “How confident should I be that a group of n shots is indicative of my accuracy?”

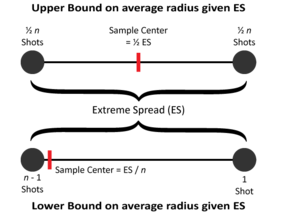

The answer is that it depends on two things: The number of shots, and the particular way those shots land. Perhaps I should construct examples of two groups that give the same parameter estimate but different confidence estimates – something analogous to the one I did to the right for Extreme Spread?

Well, outside of contrived examples in which the sample significantly alters the confidence, people are more concerned with how the number of shots affects confidence. So in order to illustrate that, I hold the distribution of the shots constant. The constant I pick is \(\overline{r^2} = 2\) (the mean squared radius) because in that case the estimated parameter σ = 1, and the chart in this section is then easy to understand because the confidence interval is a range about the estimated value (y = 1). Now people can get a feel for how confidence increases with sample size. For example, looking at that graph, hopefully people will say, “Dang, fewer than ten shots leave a lot of doubt in the estimate!”

They can also see from the chart that the confidence interval is skewed to the outside of the estimate. I.e., especially with small groups, it’s as likely that the “true” value is much larger than the sample estimate as it is likely that it’s just a little smaller than what the sample indicated. Or, put another way (if you click through to the spreadsheet behind that chart): If you shoot a 3-shot group that suggests your accuracy is σ = 1, all you can say with 95% confidence is that subsequent 3-shot groups will show σ in the range (0.78, 3.74). I.e., you should expect to see a lot larger groups, but not much smaller groups.

David (talk) 11:38, 7 February 2017 (EST)

- David, I'd like to split hairs w/r/t bullet point 3: The technical meaning of a confidence interval at the 95% level is the following: If you repeat the experiment many times and each time calculate the confidence interval, then 95% of these intervals will contain the true value. Note that all confidence intervals will be (slightly) different since they are calculated based on different samples. The same is true for the point estimates. This implies that the confidence is technically not in one particular interval, but in the method used to construct the interval. Daniel
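The coverage interpretation described in this comment can be demonstrated by simulation. The sketch below is an assumption-laden illustration, not the method behind the chart: it uses the textbook chi-square interval for σ with 2n-2 degrees of freedom (radii measured from the sample center), so its numbers need not match the spreadsheet. The helper names are hypothetical.

```python
import math
import random
from functools import lru_cache

def chi2_cdf_even(x, dof):
    """Chi-square CDF for even dof = 2m: 1 - exp(-x/2) * sum_{k<m} (x/2)^k / k!"""
    half, term, total = x / 2.0, 1.0, 1.0
    for k in range(1, dof // 2):
        term *= half / k
        total += term
    return 1.0 - math.exp(-half) * total

@lru_cache(maxsize=None)
def chi2_quantile_even(p, dof):
    """Invert the CDF by bisection (adequate for a sketch)."""
    lo, hi = 0.0, 1.0
    while chi2_cdf_even(hi, dof) < p:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if chi2_cdf_even(mid, dof) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def sigma_interval(shots, level=0.95):
    """Two-sided interval for sigma from one group of (h, v) shots."""
    n = len(shots)
    hc = sum(h for h, _ in shots) / n
    vc = sum(v for _, v in shots) / n
    ss = sum((h - hc) ** 2 + (v - vc) ** 2 for h, v in shots)
    dof, alpha = 2 * n - 2, 1.0 - level
    lower = math.sqrt(ss / chi2_quantile_even(1.0 - alpha / 2.0, dof))
    upper = math.sqrt(ss / chi2_quantile_even(alpha / 2.0, dof))
    return lower, upper

def coverage(n, true_sigma=1.0, trials=20_000, seed=3):
    """Fraction of simulated intervals that contain the true sigma."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        shots = [(rng.gauss(0, true_sigma), rng.gauss(0, true_sigma)) for _ in range(n)]
        lo, hi = sigma_interval(shots)
        hits += lo <= true_sigma <= hi
    return hits / trials

coverage_3 = coverage(3)
print(f"share of 3-shot intervals containing the true sigma: {coverage_3:.3f}")
```

Each simulated group produces a different interval, and it is the long-run share of intervals that capture the true σ that the 95% refers to.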